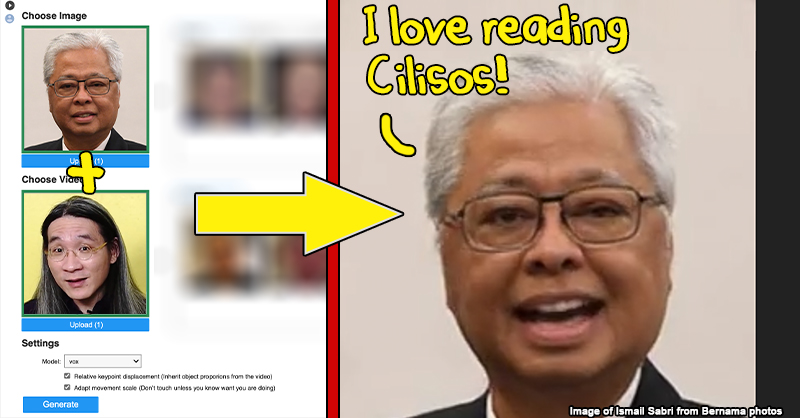

We deepfaked a video of Ismail Sabri promoting Cilisos. Here’s how convincing it was

In case you’re expecting this to just be clickbait, sorry to disappoint you but we actually did it. You can see the videos below:

1. Ismail Sabri

2. Anwar Ibrahim

3. Mahathir

In case you can’t tell, these are all fake – more specifically deepfakes. We made them to follow the movements and voice of our video editor, Aaron. These might not be the greatest deepfakes ever made, especially with how uwu Ismail Sabri’s eyes are, but we did these in just minutes with absolutely no technological know-how beyond googling and reading instructions.

But this isn’t the first time a politician was deepfaked, as the alleged sex video with Azmin Ali in it was said to be made using this technique. And more recently, there were leaked videos that are allegedly of Malaysia’s favourite dermawan Ebit Lew caught in 480p having sexual video chats, and these too were claimed to be deepfaked.

So then we decided to check two things: susah ke want to do deepfakes, and just how good is the technology now? But first, let’s find out how this works.

It started with porn (it always starts with porn)

Despite the term being relatively new, research into this technology has been going on since the 90s, before our intern was even born. One of the earliest examples was a program called Video Rewrite, released in 1997, which could be used to lipsync a person’s video to match a different audio – basically making it seem like they’re saying it. You can see how this technique has progressed since then in this deepfaked video of Obama.

But the term deepfake itself only started in November 2017, when Reddit user u/deepfakes created his own subreddit and started sharing porn with celebrities’ faces swapped into them. That subreddit has since been banned, but even then porn is still the driving force behind most deepfakes shared online, as nearly 96% of them are porn.

But it’s not all porn, just most of it. In fact, you’ve probably used the technology before. If you’ve used the Reface app, which swaps your face onto gifs and celebrity movie clips, and then shared the result online, you’re probably part of the 4% that shares non-porn deepfakes, as it’s made using the exact same technology.

Now, you’ll probably notice from these examples that the term deepfake doesn’t just refer to face swapping, as it can also refer to lipsyncing. The third form of deepfake is called puppet master – the one used to make our fake politician videos – where the person’s facial expressions and movements are matched to another video.

As to how you make it in the first place…

You create it by ‘teaching’ an AI about the person you want to deepfake

There are a few ways to make a deepfake, but no matter whether it’s lipsyncing or face swapping, it’s all done through something called deep learning. How it works is that a neural network – which is basically artificial intelligence (AI) software loosely modelled on how a human brain learns – is fed images and videos it can learn from.

So for example, if we want to swap our face onto an Aaron Aziz movie clip cause we nak acah-acah jadi movie star, first we’ll train the AI by feeding it some videos of the target we want to replace – in this case ones containing Aaron Aziz – and it’ll learn what his face looks like from all the different angles.

We’ll then feed the AI some pictures of our humble face, which it will then learn and use to replace Aaron Aziz’s face in those video clips. (Sorry AI machine for feeding you pictures of our face)

Once that’s done, you now have what’s called a ‘trained model’ of Aaron Aziz who is a trained model, and now you can even swap other faces onto that particular clip it was trained on.

Previously, you would need to feed the AI hundreds or thousands of images for it to learn, which is why celebrities and politicians tend to be the target of deepfakes – there are tons of their images floating around on the internet. And depending on how good your PC setup is, training the AI could take days or even weeks.

But deepfake technology has advanced so much, in some cases you might just need one image and one video.

We made a deepfake in the time it takes to cook Maggi

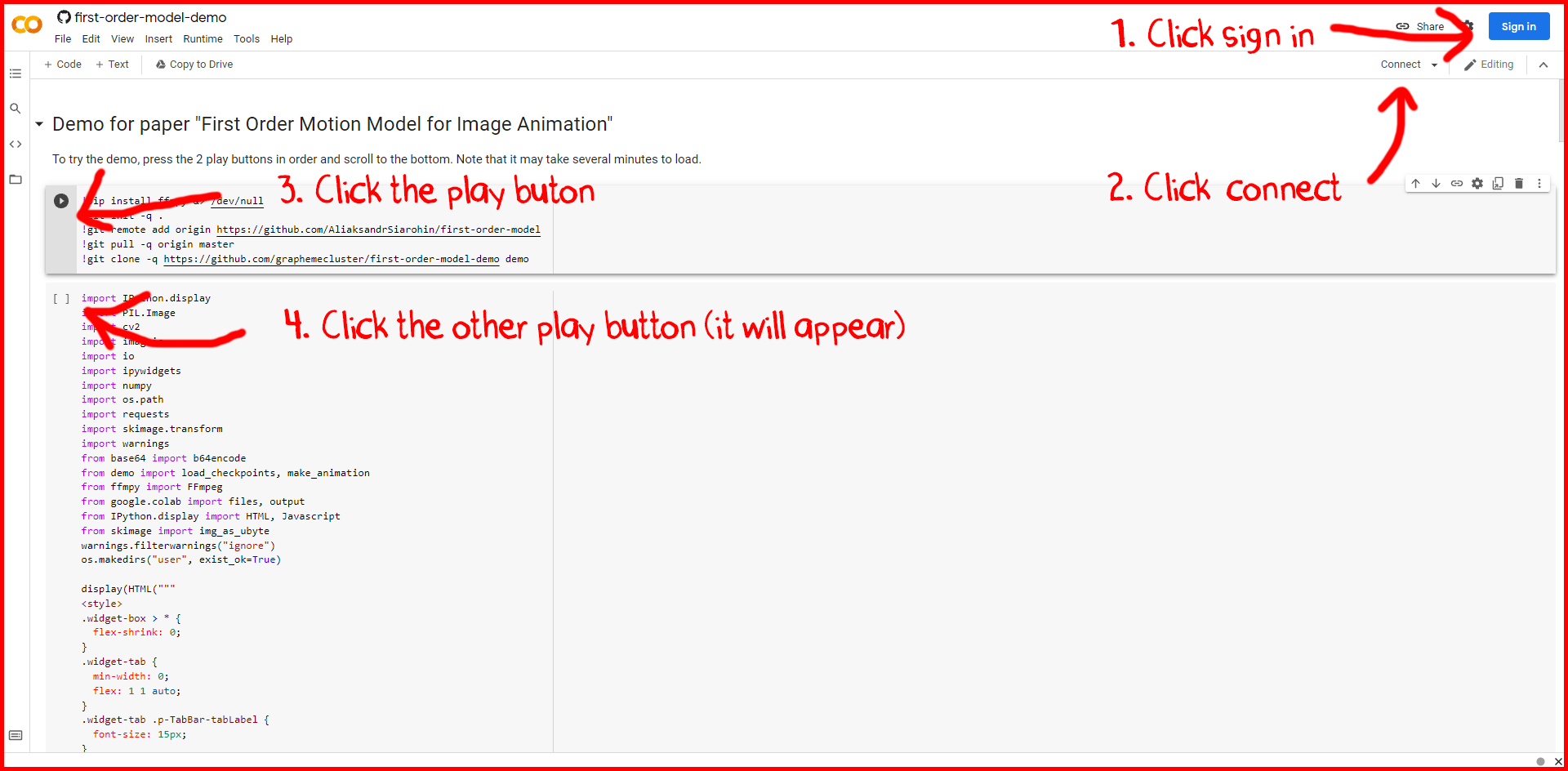

After going through a few deepfake tools online, we found basically the simplest one that didn’t require extensive programming knowledge or canggih machines, because to us Python is just a snake and our laptop is a brick.

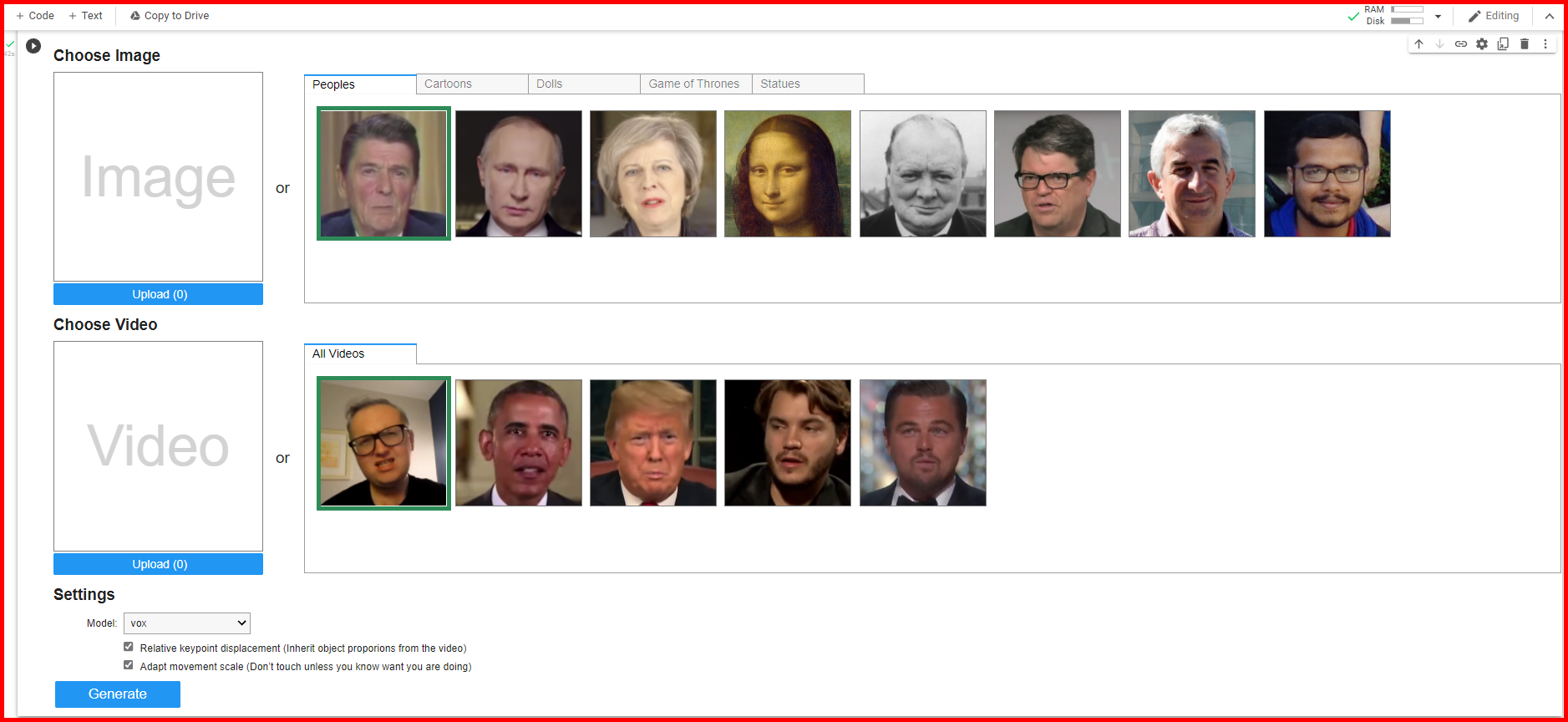

This deepfake software is called First Order Motion Model, which is a form of puppet master deepfake, and it was released in 2020. Instead of the previous method that teaches the AI specific faces to learn about, this AI was trained to recognise faces in general and their movements. So once the AI has learned about the human face, it only requires one picture and one video to create a deepfake. We chose our subject and the target video we wanted it to mimic, and within minutes we had Anwar Ibrahim telling people to like and share Cilisos.
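For the curious, here’s a rough Python sketch of the puppet master idea described above. It is a toy under our own assumptions, not the actual software’s code: the real thing uses a neural network to find the facial points and to redraw the pixels, while this only shows the "copy the movement, keep the face" part. The function `transfer_motion`, the three keypoints, and all the coordinates are made up for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def transfer_motion(
    source_kp: List[Point], driving_frames: List[List[Point]]
) -> List[List[Point]]:
    """Move the photo's keypoints by however far the video's keypoints
    have moved since the first video frame (hypothetical helper)."""
    first = driving_frames[0]
    animated = []
    for frame in driving_frames:
        moved = [
            (sx + (dx - fx), sy + (dy - fy))
            for (sx, sy), (dx, dy), (fx, fy) in zip(source_kp, frame, first)
        ]
        animated.append(moved)
    return animated


# Made-up keypoints: left eye, right eye, mouth, as (x, y) in pixels.
politician_photo = [(100.0, 90.0), (156.0, 90.0), (128.0, 170.0)]
editor_video = [
    [(60.0, 50.0), (110.0, 50.0), (85.0, 120.0)],  # frame 0: neutral face
    [(60.0, 50.0), (110.0, 50.0), (85.0, 130.0)],  # frame 1: mouth drops 10 px
    [(65.0, 50.0), (115.0, 50.0), (90.0, 120.0)],  # frame 2: head shifts 5 px right
]

animated = transfer_motion(politician_photo, editor_video)
for i, frame in enumerate(animated):
    print(i, frame)
```

Notice that only the movement comes from the video, while the starting positions come from the photo. That is why one picture of the politician is enough in this sketch: the face in the video never has to look like him.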

It’s so easy there are a few guides you can follow on how to do it. But a simpler method is to go straight to the demo page, then click the buttons shown in order.

Then scroll all the way to the bottom and wait, and this should appear there.

Just make sure the pictures and videos uploaded are 256 x 256 pixels, and make sure the video is only a few seconds long or you might get an error.
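If you’d rather work out the cropping numbers than eyeball them, here’s a small Python sketch for it. It only does the arithmetic (where to crop a square, how much to shrink it, how many frames to keep) and doesn’t touch any files. The helper names are our own, and the 5-second cap is an assumption, since all we know is that "a few seconds" works.

```python
from typing import Tuple

TARGET_SIZE = 256   # the 256 x 256 requirement mentioned above
MAX_SECONDS = 5.0   # assumption: our guess at "a few seconds"


def center_square_crop(width: int, height: int) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the largest centred square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def scale_factor(width: int, height: int) -> float:
    """How much to shrink (or enlarge) the cropped square to hit 256 px."""
    return TARGET_SIZE / min(width, height)


def frames_to_keep(total_frames: int, fps: float,
                   max_seconds: float = MAX_SECONDS) -> int:
    """Number of frames to keep so the clip stays under the time cap."""
    return min(total_frames, int(fps * max_seconds))


# Example: a 1920 x 1080 clip at 30 fps that runs for 30 seconds.
print(center_square_crop(1920, 1080))
print(scale_factor(1920, 1080))
print(frames_to_keep(900, 30.0))
```

The crop box uses the (left, top, right, bottom) order that many image tools expect, so you can plug the numbers into whatever editor or library you already use.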

Of course, the video is far from perfect. As you can tell, there are quite a few signs that it looks a bit off, plus there’s a weird glitch at the end of the video which we decided to leave in for wtf purposes.

But if there’s one thing to take away from it: deepfakes now need far fewer images to learn from, and non-techies such as us writers can do a basic one in the time it takes to cook Maggi.

This version is quite advanced and is already one year old, so with how technology is, things are only going to get better and easier in the future.

In case you’re suddenly thinking, “WTF Cilisos why teach people how to use it??? Later they make porn how???”, we do believe it’s better to educate people on how something works and that it exists, because knowledge helps you make better decisions. Now that you know how good or bad a deepfake can look, you’re better placed to tell if something is faked, and people can’t always use “it’s a deepfake” as an escape for any compromising video of themselves.

Also, as we’ve mentioned a few times the quality isn’t that great, and you still need actual programming and video editing skills to do a convincing deepfake because…

Creating a good deepfake is still hard okay

If it’s any consolation, doing a very convincing deepfake is still relatively hard. We went through some uh… artistic deepfakes of celebrities for research purposes to check how good they are, and even though the quality was good (the deepfake, we mean cough cough) it still wasn’t completely convincing due to the differences in head shapes, so it sorta looks like them but not quite. So it looks like a cousin or relative of the celebrity instead of the celebrity themselves.

There have been really convincing non-artistic ones done though. You might have seen these really good deepfakes of Tom Cruise on TikTok or YouTube before, and these actually involved a lot of work.

To get this level of quality, they hired a Tom Cruise impersonator in order to match his face shape, body size, and mannerisms to get the same vibe. One of the biggest issues with deepfakes is that you still have to match all of the above or the video will not look very convincing.

And besides the deepfake tech, they still had to do some old-school video editing work to touch it up, and even then there were still some issues that cropped up, such as Tom Cruise’s face glitching when he put on his sunglasses.

So it’s still possible, but it would need a whole lot more work than just a picture of the dude you want to deepfake. Which brings us to the hot deepfake topic now: Ebit Lew’s leaked videos.

Well… after learning about deepfakes and viewing the Ebit Lew videos ourselves, our advice is to not check them out if you don’t want to get scarred for life. But besides that, we can’t give a verdict about them simply because we’re not experts on it, and no matter the truth there’ll always be defenders claiming otherwise.

But let’s just say that doing a really good, convincing deepfake of Ebit would need a VFX editor, a techie guy to handle the deepfaking part, and an actor with a similar build who can mimic his mannerisms. So if it is a deepfake, the team that made it would have to be really, really good to pull it off.
